ChatGPT: Optimizing Language Models for Dialogue

What to do if you have 15 minutes to spare? Feed ChatGPT prompts to write an introductory article about ChatGPT. And I promise, this is the only part that is not based on a Large Language Model. Everything else was written by ChatGPT. For better readability, I replaced the prompts with simple headers.

What is ChatGPT?

Are you tired of boring, robotic chatbots that can’t hold a conversation or understand your needs? If so, you’ll be excited to hear about ChatGPT, the latest and greatest in AI language models. In this blog post, we’ll take a closer look at ChatGPT, its capabilities, and why it’s a major step forward for AI. So if you’re interested in the future of conversational AI, keep reading!

ChatGPT is a new AI language model developed by OpenAI that has the ability to understand and respond to conversational inputs in a natural and engaging way. This is a major step forward in the field of natural language processing, as it allows for more effective and engaging communication with AI systems.

One of the key capabilities of ChatGPT is its ability to understand and respond to a wide range of conversational inputs, from simple requests to more complex questions, making it a powerful tool for a variety of applications. ChatGPT has also been trained on a massive amount of data, which allows it to capture the patterns of natural language closely and generate human-like responses.

How is ChatGPT related to GPT3?

ChatGPT and GPT3 are related in that ChatGPT is a variant of GPT3 that has been specifically designed for conversational AI applications; OpenAI describes it as fine-tuned from a model in the GPT-3.5 series. GPT3, short for Generative Pre-trained Transformer 3, is a large language model trained by OpenAI. It is capable of generating human-like text and has been used for a wide range of natural language processing tasks, including language translation, summarization, and question answering.

ChatGPT is based on GPT3 and shares many of its capabilities, including its ability to generate human-like text. However, ChatGPT has additionally been fine-tuned on conversational data and with human feedback, allowing it to understand and respond to conversational inputs in a natural and engaging way. This makes it a powerful tool for conversational AI applications, such as virtual assistants and chatbots.

In summary, ChatGPT and GPT3 are related in that ChatGPT is a specialized variant of GPT3 that has been designed for conversational AI applications. While both models share many similar capabilities, ChatGPT has been specifically trained and optimized for conversational AI tasks.

What happens when GPT4 is published in the next few months?

While ChatGPT is an impressive development in AI, it is still only a teaser for what is to come with GPT4. GPT4 is the next generation of the GPT language model, and is expected to be even more powerful and capable than ChatGPT. With GPT4, we can expect even more advanced natural language processing capabilities, as well as the ability to handle a wider range of tasks and applications.

Overall, ChatGPT is a major step forward for AI and natural language processing, but it is only a glimpse of what is to come with GPT4. As we continue to advance in the field of AI, we can expect even more impressive developments and capabilities in the near future.

How does the collection of data and reward model in ChatGPT work?

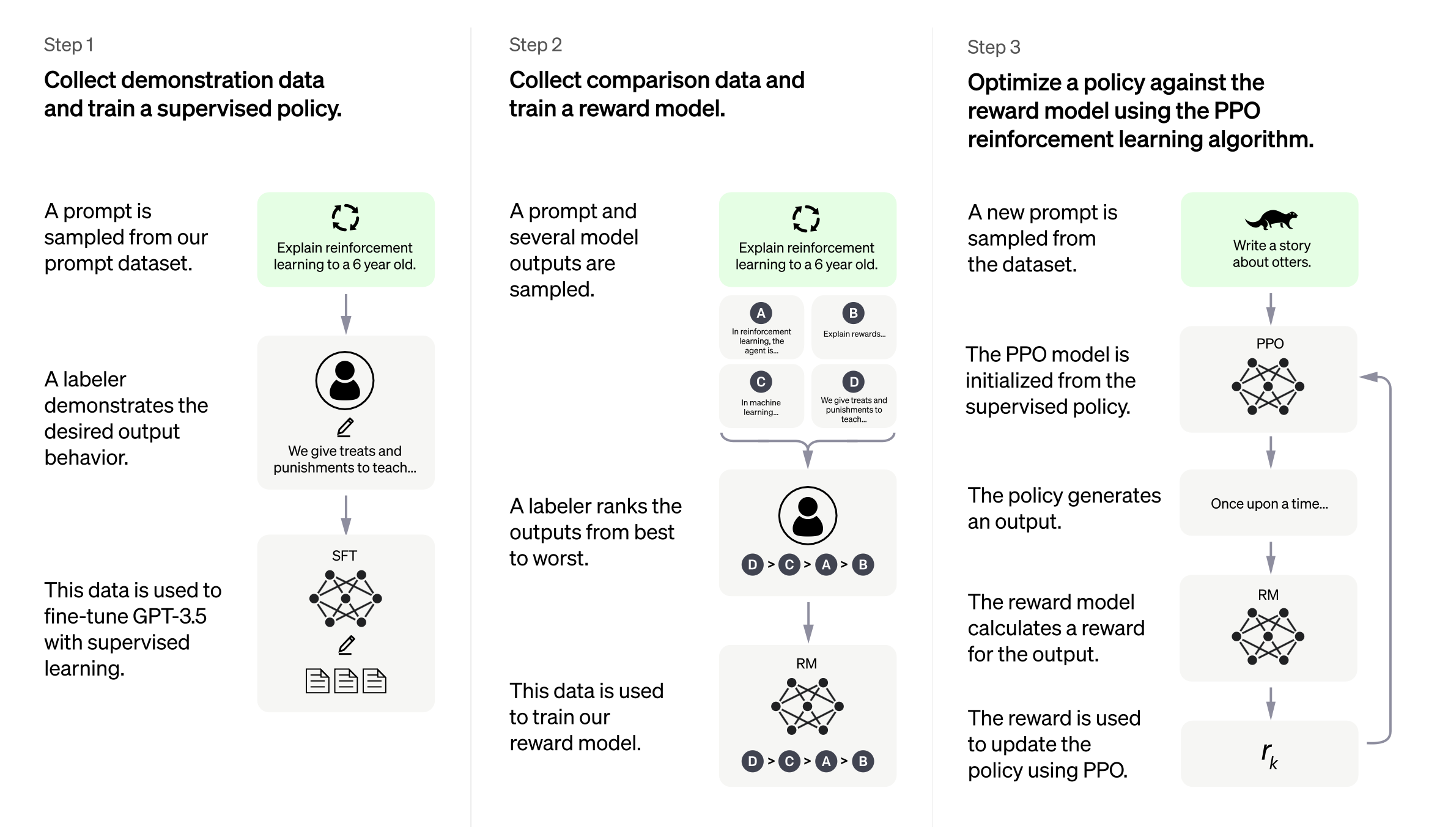

The collection of data and reward model in ChatGPT are two key components of the model’s training process. In order to generate human-like text and engage in natural conversations, ChatGPT must be trained on large amounts of data and be able to learn from the feedback it receives.

To collect data for ChatGPT, OpenAI started from a model in the GPT-3.5 series, which had been pretrained on a large corpus of text. Human AI trainers then wrote example conversations in which they played both sides, the user and the AI assistant, and the model was fine-tuned on these demonstrations with supervised learning. This helps it learn the structure and patterns of natural dialogue.

The reward model in ChatGPT is used to evaluate the model’s responses and provide feedback on them. To train it, AI trainers ranked several alternative model responses to the same prompt from best to worst, and the reward model learned to predict these rankings as a score. The chat model was then fine-tuned with reinforcement learning (Proximal Policy Optimization, PPO): it is rewarded for responses the reward model rates highly, such as relevant, appropriate, and human-like ones, and penalized for low-rated ones, such as irrelevant or nonsensical answers. This process is known as reinforcement learning from human feedback (RLHF), and OpenAI performed several iterations of it to improve the model over time.

In summary, the collection of data and reward model are key components of ChatGPT’s training process. The model is fine-tuned on conversations written by human trainers, and a reward model trained on human rankings guides reinforcement learning to improve its responses.
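To make the reward-model step more concrete, here is a minimal Python sketch of the pairwise ranking loss that OpenAI described for InstructGPT, whose methods it says ChatGPT reuses: human trainers rank several responses, each ranking is split into (preferred, rejected) pairs, and the reward model is pushed to score the preferred answer higher. This is an illustration under stated assumptions, not OpenAI’s code. The function names, the response labels, the scores, and the `beta` value are all hypothetical, and a real reward model is a neural network rather than a dictionary of numbers.

```python
import itertools
import math

# Minimal sketch of RLHF reward-model math (hypothetical names and numbers).


def ranking_to_pairs(ranked_responses):
    """Split a best-to-worst ranking into (preferred, rejected) pairs.

    Every earlier item in the ranking is preferred over every later one,
    so K ranked responses give K * (K - 1) / 2 pairs.
    """
    return list(itertools.combinations(ranked_responses, 2))


def pairwise_loss(score_preferred, score_rejected):
    """Return -log(sigmoid(score_preferred - score_rejected)).

    The loss is near zero when the preferred response scores much higher
    and grows when the reward model ranks the pair the wrong way round.
    """
    diff = score_preferred - score_rejected
    # -log(sigmoid(d)) equals log(1 + exp(-d)); branch so exp() never overflows.
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


def ranking_loss(scores, ranked_responses):
    """Average pairwise loss over all comparisons implied by one ranking."""
    pairs = ranking_to_pairs(ranked_responses)
    total = sum(pairwise_loss(scores[w], scores[l]) for w, l in pairs)
    return total / len(pairs)


def shaped_reward(rm_score, logprob_policy, logprob_reference, beta=0.02):
    """Reward seen during RL fine-tuning: the reward-model score minus a
    KL-style penalty that discourages drifting far from the supervised
    (reference) model. beta is a hypothetical penalty weight.
    """
    return rm_score - beta * (logprob_policy - logprob_reference)


if __name__ == "__main__":
    ranked = ["A", "C", "B"]                  # a trainer's ranking, best first
    scores = {"A": 2.0, "B": -1.0, "C": 0.5}  # hypothetical reward-model scores
    print(ranking_to_pairs(ranked))  # [('A', 'C'), ('A', 'B'), ('C', 'B')]
    print(ranking_loss(scores, ranked))
    print(ranking_loss(scores, ["B", "C", "A"]))  # reversed ranking: larger loss
```

A ranking of K responses yields K(K-1)/2 comparisons, so one ranking of three answers gives three training pairs. The loss is smallest when the reward model already agrees with the trainer, and the penalty in `shaped_reward` reflects the InstructGPT idea of keeping the fine-tuned model close to the supervised model while it chases high reward scores.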

Why are policies important for ChatGPT?

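Policies are important for ChatGPT because they help to ensure that the model behaves in an appropriate and ethical manner. In the context of conversational AI, policies are sets of rules and guidelines that dictate how the model should respond to different inputs and situations. (In reinforcement learning, “policy” also has a narrower technical meaning: the model being optimized, which maps a prompt to a response. The rules discussed here are content and usage policies.)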

Policies can help to prevent ChatGPT from generating inappropriate or offensive responses, and can ensure that the model behaves in a way that aligns with the values and standards of the organization or individuals using it. For example, a policy might dictate that ChatGPT should not generate responses that are sexist, racist, or otherwise discriminatory.

Policies can also help to ensure that ChatGPT is used in a responsible and ethical manner. For example, a policy might dictate that ChatGPT should not be used for malicious purposes, such as spreading misinformation or harassing individuals.

In summary, policies are important for ChatGPT because they help to ensure that the model behaves in an appropriate and ethical manner. By defining rules and guidelines for the model’s behavior, policies can help to prevent inappropriate or offensive responses, and can ensure that ChatGPT is used in a responsible and ethical way.

What are the pitfalls of ChatGPT?

The pitfalls of ChatGPT include the potential for the model to generate inappropriate or offensive responses, the risk of bias and discrimination, and the potential for the model to be used for malicious purposes.

One of the main pitfalls of ChatGPT is the potential for the model to generate inappropriate or offensive responses. Because ChatGPT is trained on large amounts of data, it is possible that the model may be exposed to offensive or inappropriate language. If this language is included in the model’s training data, ChatGPT may generate responses that are offensive or inappropriate, even if it is not intended to do so.

Another potential pitfall of ChatGPT is the risk of bias and discrimination. Because the model is trained on data from the real world, it may inherit the biases and prejudices that are present in this data. For example, if the model is trained on data that comes predominantly from a certain demographic group, it may generate responses that favor that group or discriminate against others.

Finally, another pitfall of ChatGPT is the potential for the model to be used for malicious purposes. Because ChatGPT is capable of generating human-like text, it could be used to spread misinformation or to harass or deceive individuals. This could have harmful consequences, and it is important to ensure that ChatGPT is used in an ethical and responsible manner.

In summary, the pitfalls of ChatGPT include the potential for the model to generate inappropriate or offensive responses, the risk of bias and discrimination, and the potential for the model to be used for malicious purposes. It is important to carefully consider these potential pitfalls and to develop policies and guidelines to prevent and mitigate them.

I heard rumors: is ChatGPT producing wrong answers?

ChatGPT can sometimes produce answers that are wrong but sound right. As a large language model, ChatGPT generates text by predicting likely continuations of its input, which makes its output fluent but not necessarily accurate; OpenAI itself notes that there is currently no source of truth during its reinforcement learning training. In addition, because it is trained on data from the real world, it may inherit the biases, prejudices, and errors that are present in this data.

For example, if ChatGPT is trained on data that contains misinformation or false information, it may generate responses that are incorrect but sound convincing. Similarly, if the model is trained on data that is biased or discriminatory, it may generate responses that are unfair or offensive, but that sound reasonable or logical.

Therefore, it is important to carefully monitor and evaluate ChatGPT’s responses, for example by checking factual claims against reliable sources, and to train the model on high-quality, accurate data. This can reduce, though not eliminate, wrong answers that sound right, and can help to ensure that ChatGPT is used in an ethical and responsible manner.

How is ChatGPT related to ELIZA effect and AGI?

ChatGPT is related to the ELIZA effect because it is exactly the kind of system that can trigger it. The effect is named after ELIZA, a chatbot written by Joseph Weizenbaum at MIT in the 1960s, and describes a phenomenon in which individuals attribute human-like qualities to a conversational AI system, even if the system is not capable of genuine intelligence or understanding.

ChatGPT is a large language model that has been trained on a wide range of conversational data, allowing it to generate human-like text and engage in natural language conversations. However, like other current AI systems, ChatGPT is not considered capable of true intelligence or understanding; its responses are based on the data it has been trained on and the algorithms that govern its behavior. Despite this, individuals who interact with ChatGPT may be susceptible to the ELIZA effect and may attribute human-like qualities to the model.

ChatGPT is also related to artificial general intelligence (AGI), a term used to describe the hypothetical ability of a machine to understand or learn any intellectual task that a human being can. While ChatGPT is a powerful and capable language model, it is not AGI: it cannot understand or learn every intellectual task that a human being can. ChatGPT is a specialized AI system designed for a specific purpose, natural language processing, and lacks the general intelligence that is characteristic of AGI.

In summary, ChatGPT is related to the ELIZA effect in that its human-like conversation can lead individuals to attribute human qualities to it. ChatGPT is also related to AGI in that it is a powerful and capable language model, but it does not have the general intelligence that is characteristic of AGI.

Summary

ChatGPT is a large language model that has been specifically designed for conversational AI applications. It has been trained on a wide range of conversational data, allowing it to understand and respond to a wide range of inputs in a natural and engaging way. Prompts are used to provide ChatGPT with a starting point for generating text, and the model is evaluated and improved through a process known as reinforcement learning.

However, ChatGPT is not perfect, and may sometimes generate responses that are incorrect or inappropriate. The potential for ChatGPT to produce wrong answers is one of the pitfalls of the model, and is something that should be carefully considered when using the model for conversational AI applications. Additionally, the use of ChatGPT may be subject to the ELIZA effect, in which individuals may attribute human-like qualities to the model, even if it is not capable of genuine intelligence or understanding.

Overall, ChatGPT is a major step forward for AI and is a powerful tool for conversational AI applications. However, it is important to carefully monitor and evaluate the model’s performance, and to provide it with high-quality training data. It is also important to develop policies and guidelines to ensure that the model is used in an ethical and responsible manner.

Further Links

Introducing ChatGPT by Cassie Kozyrkov

https://medium.com/@kozyrkov/introducing-chatgpt-aa824ad89623

The Batch by DeepLearningAI — Andrew Ng on LLM

https://www.deeplearning.ai/the-batch/issue-174/

AI Snake Oil on why ChatGPT is a bullshit generator

https://aisnakeoil.substack.com/p/chatgpt-is-a-bullshit-generator-but