This summary is based on another post: “An introduction to ChatGPT written by ChatGPT”.

Having tested ChatGPT for a few weeks now, I have found that the texts it creates are often repetitive and monotonous. In this post, I have tried to condense the meaningful information from the other post.

2022 was the year of generative AI. Generative AI refers to machine learning algorithms that can create new content such as text, images, and code. Leading generative AI tools include DeepMind’s AlphaCode (DeepMind is part of Alphabet, Google’s parent company), OpenAI’s ChatGPT, GPT-3.5, and DALL-E, as well as Midjourney, Jasper, and Stable Diffusion. These are large language models and image generators.

In particular, ChatGPT (GPT stands for Generative Pre-trained Transformer) by OpenAI, an artificial intelligence research laboratory, is the latest of these AI language models and has received serious media attention in recent weeks. In The Atlantic’s “Breakthroughs of the Year” for 2022, Derek Thompson described ChatGPT as part of “the generative-AI eruption” that “may change our mind about how we work, how we think, and what human creativity really is”.

ChatGPT is fine-tuned from a model in OpenAI’s GPT-3.5 series, which builds on GPT-3 (Generative Pre-trained Transformer 3), a large language model trained using deep learning. Large language models (LLMs) generate human-like text and can be used for a variety of natural language processing tasks, including translation and question answering.
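
GPT-style models generate text one token at a time: at each step the model assigns a score (logit) to every possible next token, turns the scores into probabilities with a softmax, and then picks or samples the next token. The toy sketch below is only an illustration under loud assumptions: a hand-written bigram table (`TOY_LOGITS`, hypothetical) stands in for the Transformer, so it only looks at the previous word, and it always picks the most likely token (greedy decoding).

```python
import math

# Hypothetical toy "language model": for each previous word, unnormalized
# scores (logits) for the next word. A real LLM computes such scores with a
# Transformer over the whole context and a vocabulary of tens of thousands of tokens.
TOY_LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"model": 2.5, "text": 1.5},
    "a": {"model": 1.0, "text": 2.0},
    "model": {"writes": 2.0, "<end>": 0.5},
    "text": {"<end>": 2.0, "writes": 0.1},
    "writes": {"text": 2.0, "<end>": 1.0},
}


def softmax(logits: dict[str, float]) -> dict[str, float]:
    """Turn a dict of scores into a probability distribution."""
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate_greedy(max_tokens: int = 10) -> str:
    """Generate text token by token, always choosing the most likely next token."""
    tokens = []
    prev = "<start>"
    for _ in range(max_tokens):
        probs = softmax(TOY_LOGITS[prev])
        nxt = max(probs, key=probs.get)
        if nxt == "<end>":
            break
        tokens.append(nxt)
        prev = nxt
    return " ".join(tokens)


print(generate_greedy())
```

The model never "knows" what the words mean; it only follows the scores, which is exactly the limitation discussed later in this post. Real systems usually sample from the probabilities instead of always taking the maximum, which makes the output less repetitive.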

How does ChatGPT work?

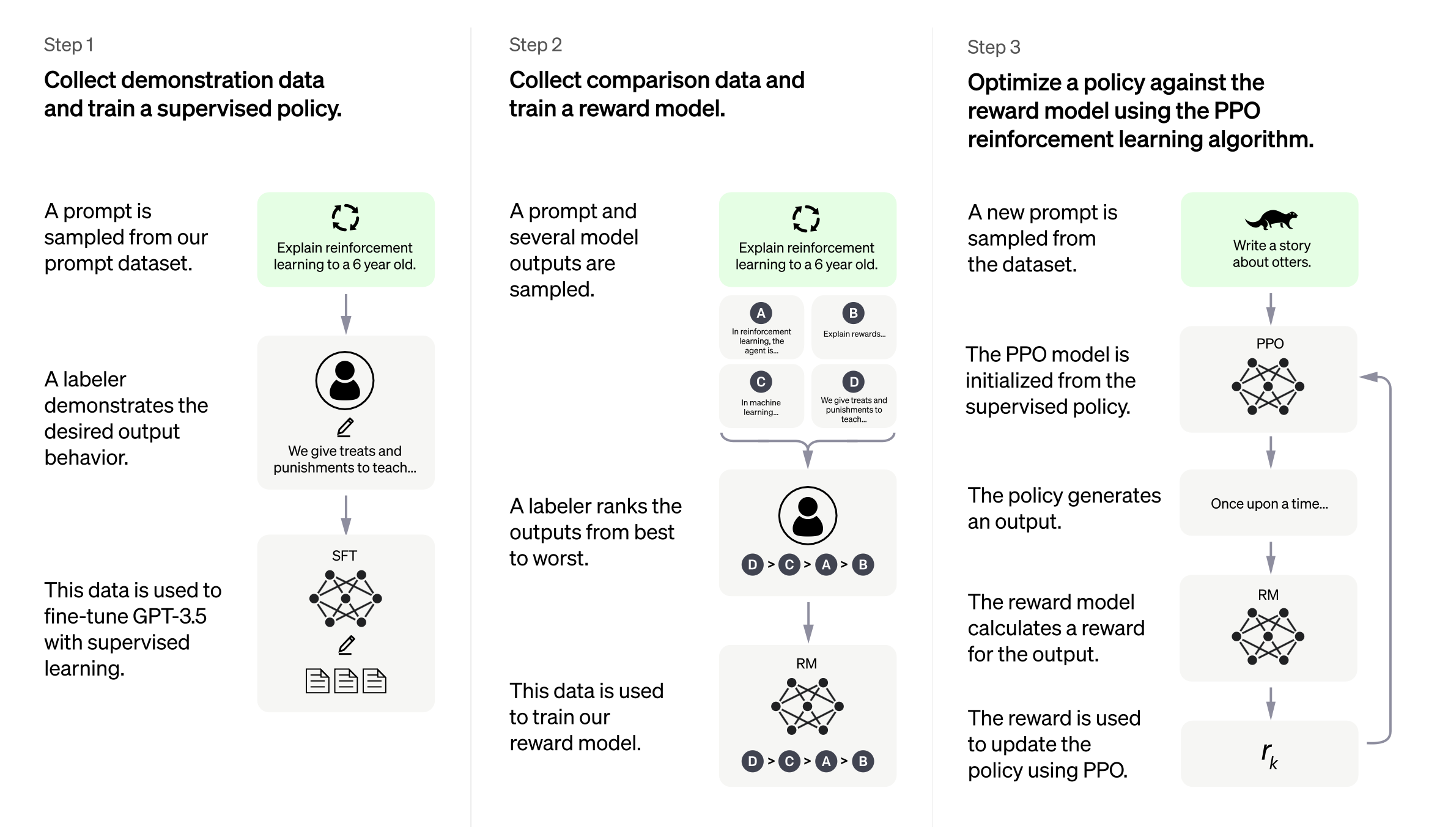

The success of ChatGPT rests on training with human feedback, so-called reinforcement learning from human feedback (RLHF). This is also one of the biggest differences from previous language models. In the latest ChatGPT version, OpenAI incentivizes users to give feedback in order to collect even more feedback data, and it sees RLHF as fundamental to AI that takes human needs into account and thus to the development of further AI systems.


To train ChatGPT, the model is exposed to a variety of conversational data, including transcripts of real-world conversations, dialogues from books and movies, and other sources of conversational text. This data helps the model learn the structure and patterns of natural language. In addition, the model is fine-tuned on conversations in which human trainers wrote the answers.
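
For the fine-tuning on human-written answers, a conversation can be split into supervised training examples: the model learns to produce each assistant reply given everything said before it. The sketch below assumes a hypothetical `Turn` record and a simple `speaker: text` context format; it is not OpenAI’s actual data format.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    speaker: str  # hypothetical labels: "user" or "assistant"
    text: str


def to_training_pairs(dialogue: list[Turn]) -> list[tuple[str, str]]:
    """Split one conversation into (context, target) training examples.

    Every assistant turn becomes a target; all turns before it form the
    context the model learns to continue from.
    """
    pairs = []
    for i, turn in enumerate(dialogue):
        if turn.speaker == "assistant":
            context = "\n".join(f"{t.speaker}: {t.text}" for t in dialogue[:i])
            pairs.append((context, turn.text))
    return pairs


dialogue = [
    Turn("user", "What is RLHF?"),
    Turn("assistant", "Reinforcement learning from human feedback."),
    Turn("user", "Who gives the feedback?"),
    Turn("assistant", "Human trainers who rate or write answers."),
]
for context, target in to_training_pairs(dialogue):
    print(repr(context), "->", repr(target))
```

A conversation with two assistant turns yields two examples, the second one with a longer context.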


The reward model in ChatGPT is trained on human rankings of alternative answers and is then used to score the model’s responses. The model is improved through reinforcement learning: it is rewarded when it generates responses that are relevant, appropriate, and human-like, and penalized when it generates responses that are irrelevant or nonsensical. This feedback helps the model improve its performance over time.
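
In OpenAI’s published RLHF recipe (described for InstructGPT, which OpenAI says ChatGPT follows with slight differences in data collection), labelers rank several answers to the same prompt, and the reward model is trained so that preferred answers receive higher scores. A common way to express this is the pairwise loss -log σ(r_w - r_l), averaged over all pairs from one ranking. The sketch below applies it to hand-picked scores; the function names and numbers are illustrative assumptions, and a real reward model is a neural network that produces the scores.

```python
import math
from itertools import combinations


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_loss(r_preferred: float, r_rejected: float) -> float:
    """-log(sigmoid(r_preferred - r_rejected)).

    Small when the reward model scores the human-preferred answer higher,
    large when it scores the rejected answer higher.
    """
    return softplus(r_rejected - r_preferred)


def ranking_loss(scores_best_to_worst: list[float]) -> float:
    """Average pairwise loss over all pairs from one human ranking of K answers."""
    # combinations yields (i, j) with i < j, i.e. answer i was ranked above answer j
    pairs = list(combinations(range(len(scores_best_to_worst)), 2))
    total = sum(
        pairwise_loss(scores_best_to_worst[i], scores_best_to_worst[j])
        for i, j in pairs
    )
    return total / len(pairs)


# Made-up reward-model scores for three answers, listed in the human's order
print(ranking_loss([2.0, 0.5, -1.0]))  # scores agree with the ranking: low loss
print(ranking_loss([-1.0, 0.5, 2.0]))  # scores contradict the ranking: high loss
```

Training the reward model means adjusting its parameters to push this loss down, so that its scores reproduce the human rankings.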


Policies help prevent ChatGPT from generating inappropriate or offensive responses and can ensure that the model behaves consistently with the values and norms of the organization or the people using it. For example, a policy could dictate that ChatGPT must not generate sexist, racist, or otherwise discriminatory responses.
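
In RLHF terminology, the *policy* is the language model itself: the reinforcement-learning step adjusts it so that responses the reward model scores highly, such as helpful and non-offensive ones, become more likely, while a KL penalty keeps it close to the model it started from. OpenAI uses PPO for this step. The sketch below swaps PPO for an exact policy gradient over three hypothetical candidate answers with made-up rewards, just to show the objective E[r] - β·KL(π || π_ref) at work. The function name, rewards, β, and learning rate are all illustrative assumptions.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


def kl_penalized_step(logits, ref_probs, rewards, beta=0.1, lr=0.5):
    """One gradient-ascent step on E_pi[r] - beta * KL(pi || pi_ref).

    Toy stand-in for PPO: the answer set is tiny and fixed, so the expected
    gradient is computed exactly instead of from sampled responses.
    """
    probs = softmax(logits)
    # Per-answer objective: reward minus the KL penalty term
    f = [r - beta * (math.log(p) - math.log(q))
         for r, p, q in zip(rewards, probs, ref_probs)]
    baseline = sum(p * fi for p, fi in zip(probs, f))
    # d/dlogit_k of the objective for a softmax policy: p_k * (f_k - baseline)
    grads = [p * (fi - baseline) for p, fi in zip(probs, f)]
    return [x + lr * g for x, g in zip(logits, grads)]


# Hypothetical example: three candidate answers to one prompt
responses = ["helpful answer", "vague answer", "offensive answer"]
rewards = [1.0, 0.2, -1.0]       # as a reward model might score them
ref_probs = [1 / 3, 1 / 3, 1 / 3]  # the model before RLHF (reference policy)
logits = [0.0, 0.0, 0.0]
for _ in range(200):
    logits = kl_penalized_step(logits, ref_probs, rewards)

final = softmax(logits)
for resp, p in zip(responses, final):
    print(f"{resp}: {p:.3f}")
```

The optimum of this objective is proportional to π_ref · exp(r/β), so with β = 0.1 the offensive answer is driven toward zero probability; a larger β would keep the policy closer to the reference model.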

What are the limitations and pitfalls of ChatGPT?

ChatGPT is not capable of real understanding; its answers are based on the data it was trained on and the algorithms that drive its behavior. It cannot truly handle the complexities of human language and conversation. ChatGPT is not intelligent.

It is trained to generate words based on a given input, without the ability to understand the meaning behind those words. As a result, the answers it generates are likely to be superficial and to lack depth and insight. When using it, you also notice repetitive and monotonous language. There are already models that can distinguish between text generated by a GPT model and text written by a human.

If the model was trained on biased or discriminatory data, it can generate responses that are unfair or offensive but sound reasonable or logical. The model also definitely generates wrong answers that sound convincing. It is therefore important to carefully review and evaluate ChatGPT’s responses and to ensure that it is used in an ethical and responsible manner.

What does the future bring?

AI models like ChatGPT will enter normal everyday work in the coming months to years. Microsoft, an investor in OpenAI, is already openly considering using the model in Bing and Office products. Despite some problems, these models have enormous potential to speed up and improve daily work.

Another concrete example is GitHub Copilot, which is powered by OpenAI Codex, another OpenAI model. Codex is a descendant of GPT-3 that was additionally trained on source code and is specifically aimed at generating program code. Copilot left its beta and became generally available in 2022, and it can already significantly improve your coding workflow.

ChatGPT is an impressive development in the field of artificial intelligence and marks a media milestone, but it is only a snapshot. GPT-4 is the next generation of OpenAI’s GPT language models and is expected to be even more powerful than GPT-3. Claims that GPT-4 has 170 trillion parameters, compared to GPT-3’s 175 billion, are unconfirmed rumors; OpenAI has not published a parameter count. Still, the accuracy of these models is likely to keep improving, and they will continue to find their way into our everyday lives.